Acoustic metamaterials and machine learning beat the diffraction limit

25 Aug 2020

Bakhtiyar Orazbayev and Romain Fleury at the Swiss Federal Institute of Technology in Lausanne (EPFL) have developed a system that reconstructs and classifies acoustic images. These images contain features far smaller than the wavelength of the sound used to produce them. Their technique beats the diffraction limit by combining a metamaterial lens with machine learning, and it could be adapted to work with light. The research could lead to new advances in image analysis and object classification, particularly in biomedical imaging.

The diffraction limit is a fundamental constraint on using light or sound waves to image tiny objects. If the separation between two features is smaller than about half the wavelength of the light or sound used, then the features cannot be resolved using conventional techniques.
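For sound, the wavelength follows from λ = c/f, so the half-wavelength rule of thumb is simple arithmetic. The sketch below assumes sound travelling in air at roughly 343 m/s. It also assumes a hypothetical 2 kHz tone, because the article does not give the operating frequency. The helper names are illustrative only.

```python
# Rule-of-thumb diffraction limit for sound: features closer than about
# half a wavelength cannot be resolved with conventional imaging.
SPEED_OF_SOUND_AIR = 343.0  # m/s, approximate value in dry air at about 20 °C


def wavelength(frequency_hz: float, speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Wavelength in metres, lambda = c / f."""
    return speed / frequency_hz


def diffraction_limit(wavelength_m: float) -> float:
    """Approximate smallest resolvable separation: half a wavelength."""
    return wavelength_m / 2


def resolvable(separation_m: float, wavelength_m: float) -> bool:
    """True if two features are far enough apart to be resolved conventionally."""
    return separation_m >= diffraction_limit(wavelength_m)


if __name__ == "__main__":
    lam = wavelength(2000.0)  # hypothetical 2 kHz tone, not a value from the article
    print(f"wavelength: {lam:.4f} m")
    print(f"diffraction limit: {diffraction_limit(lam):.4f} m")
    feature = lam / 30  # a feature 30 times smaller than the wavelength
    print(f"lambda/30 feature: {feature:.4f} m, resolvable: {resolvable(feature, lam)}")
```

A feature one-thirtieth of a wavelength, as in the experiment described below, falls well under this half-wavelength threshold whatever frequency is assumed.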

One way of beating the diffraction limit is to use near-field waves that only propagate very short distances from an illuminated object and carry subwavelength spatial information. Metamaterial lenses have been used to amplify near-field waves, allowing them to propagate over longer distances in imaging systems. However, this approach is prone to heavy losses, resulting in noisy final images.

Hidden structures

In their study, Orazbayev and Fleury improved on this metamaterial technique by combining it with machine learning – through which neural networks can be trained to discover intricate hidden structures within large, complex datasets.

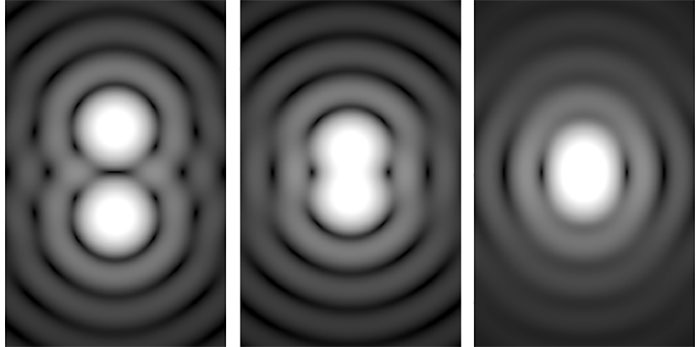

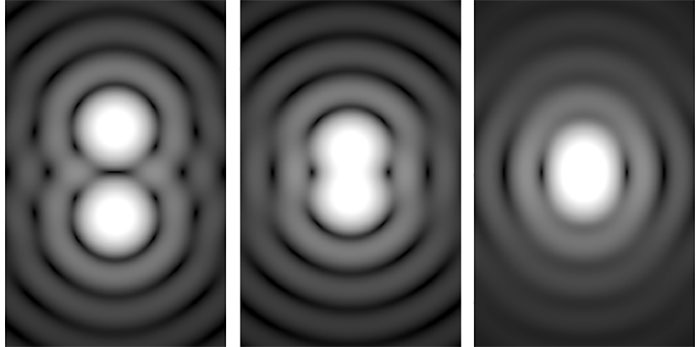

The duo first trained a neural network on a database of 70,000 variations of the digits 0–9. They then displayed the digits acoustically using an 8×8 array of loudspeakers measuring 25 cm across, with the amplitude of each speaker representing the brightness of one pixel. They also placed a lossy metamaterial lens in front of the speaker array, consisting of a cluster of sub-wavelength plastic spheres (see figure). These spheres acted as resonant cavities. They coupled to the decaying near-field waves and amplified them so that they could travel long distances.
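Displaying a digit on the loudspeaker array amounts to resampling the image onto an 8 × 8 grid and using each cell's brightness as a speaker amplitude. The following Python sketch is a hypothetical illustration, not the authors' encoding. It assumes a square grayscale image with 0–255 pixel values whose side is a multiple of 8. It block-averages that image and normalizes the amplitudes to [0, 1]. It also estimates the speaker spacing, assuming the 25 cm width is divided evenly among eight speakers.

```python
from typing import List

Image = List[List[float]]


def to_speaker_amplitudes(image: Image, grid: int = 8, max_value: float = 255.0) -> Image:
    """Block-average a square grayscale image onto a grid x grid array of
    speaker amplitudes in [0, 1] (hypothetical encoding for illustration)."""
    n = len(image)
    if n % grid != 0 or any(len(row) != n for row in image):
        raise ValueError("image must be square with a side that is a multiple of grid")
    block = n // grid  # pixels per speaker along each axis
    amps = []
    for gi in range(grid):
        row = []
        for gj in range(grid):
            # Mean brightness of the block of pixels assigned to this speaker
            total = sum(
                image[gi * block + i][gj * block + j]
                for i in range(block)
                for j in range(block)
            )
            row.append(total / (block * block) / max_value)
        amps.append(row)
    return amps


def speaker_pitch(array_width_m: float = 0.25, grid: int = 8) -> float:
    """Centre-to-centre speaker spacing, assuming evenly spaced speakers."""
    return array_width_m / grid
```

For example, a 16 × 16 image is reduced by averaging each 2 × 2 block of pixels. Under the even-spacing assumption, `speaker_pitch()` gives about 3.1 cm between neighbouring speakers.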

Orazbayev and Fleury placed an array of microphones several metres away and recorded the amplitudes and phases of the resulting waves. They fed these measurements into two separate variations of their neural network: one to reconstruct the images and the other to classify specific digits. The most subtle features of the displayed digits were 30 times smaller than the wavelength of sound emitted by the speakers. Even so, the algorithms classified images they had never seen before with an accuracy of almost 80%.

Orazbayev and Fleury now hope to implement their technique using light waves. This would allow them to apply their neural networks to cellular-scale biological structures, producing high-resolution images quickly and with little processing power. If achieved, such a technique could open new opportunities for biomedical imaging, with potential applications ranging from cancer detection to early pregnancy tests.

The research is described in Physical Review X.

from physicsworld.com 7/9/2020
