Deep learning improves fibre optic imaging

01 Oct 2018 Belle Dumé

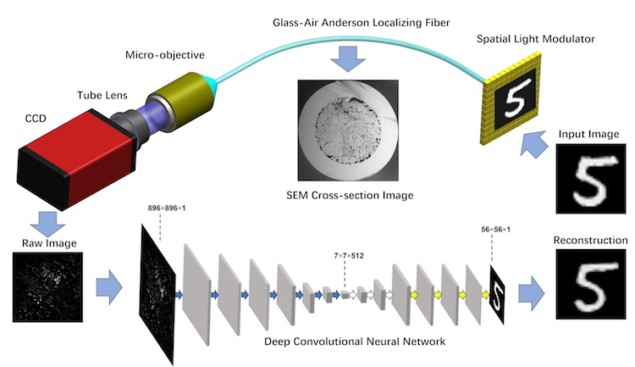

A new flexible, artifact-free and lensless fibre-based imager can reconstruct high-quality images thanks to a trained deep neural network. The device, which is the first of its kind, transmits the images through disordered fibres using an effect called transverse Anderson localization. It works even for objects located several millimetres away from the fibre input face, without the need for any additional distal optics. It might find use in practical endoscopy and other imaging applications.

Fibre optical endoscopes (FOEs) are routinely employed in biomedical research, in diagnosing disease and in surgery. They can be used in situations where conventional microscopy does not work very well. FOEs can also be implanted in patients, allowing their health to be monitored over the long term.

Researchers have recently improved how light is transmitted through these devices using a novel scheme based on transverse Anderson localization. In this effect, a randomly disordered glass-air fibre cross-section prevents light from spreading in the directions perpendicular to its direction of propagation.

“Ordinary” Anderson localization is named after US physicist Philip Warren Anderson, who was the first to identify the effect back in 1958 as the interference of waves scattering from random impurities in a crystal. This interference can abruptly halt (or “localize”) the propagation of the wave. Anderson subsequently went on to win the 1977 Nobel Prize for Physics for his work. The transverse Anderson localization effect was first identified in 2008 by Moti Segev and colleagues at the Technion – Israel Institute of Technology.

GALOF

The glass-air Anderson localized optical fibre (GALOF) scheme has many advantages over conventional multimode fibres (MMFs). For one, it can support thousands of optical modes in a random structure and, unlike those in MMFs, these optical modes are insensitive to whether the fibre is bent or straight. What is more, imaging can be done without extra lenses or mechanical parts – as long as the object is positioned next to the input face of the GALOF.

GALOF can also directly transmit high-quality images through fibres as short as a metre long thanks to its low attenuation of less than 1 dB/m for visible wavelengths. These images are comparable to those obtained through some of the best commercial fibre bundles available today.
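To put the attenuation figure in perspective, a loss in decibels converts to a transmitted power fraction via 10^(-dB/10). The short Python sketch below applies that standard conversion, assuming a loss of exactly 1 dB/m (the upper bound quoted above). The function name and the sample lengths are illustrative and do not come from the study.

```python
def transmitted_fraction(loss_db_per_m: float, length_m: float) -> float:
    """Fraction of input optical power remaining after length_m of fibre."""
    total_loss_db = loss_db_per_m * length_m
    return 10 ** (-total_loss_db / 10)


# Assumed worst case of 1 dB/m, taken from the "less than 1 dB/m" figure.
# The fibre lengths are illustrative values only.
for length_m in (1.0, 2.0):
    frac = transmitted_fraction(1.0, length_m)
    print(f"{length_m:.1f} m: {frac:.1%} of the input power is transmitted")
```

Under that assumption, roughly four-fifths of the light launched into a one-metre fibre would still reach the far end.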

Current GALOF-based FOEs are still far from perfect, though. One of the main problems is that the image plane of an object needs to be located in the direct vicinity of the GALOF’s input face, as mentioned.

Enter deep learning technology

A team of researchers led by Axel Schülzgen at the College of Optics and Photonics at the University of Central Florida in Orlando has now overcome this problem by combining GALOFs with deep learning algorithms. The result is a flexible, lensless FOE that produces artifact-free, high-quality images.

Deep learning is a relatively new field of research that is already being applied to solve a number of imaging-related problems. “In contrast to conventional methods of optimizing images, deep learning algorithms can ‘learn’ how complicated optical waves propagate through the whole imaging set up without having any prior knowledge of them,” explains Jian Zhao, who is lead author of this study.

“In our work, we applied a particular type of deep neural network called a convolutional neural network (CNN). We combined this algorithm for image reconstruction with a specially designed GALOF (made of silica) for image transmission. This fibre consists of a random mix of tiny glass structures and air voids. Since the trained network ‘understands’ the physics of the image transport system, no lenses or other optical elements are needed to relay images.”
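For readers unfamiliar with CNNs, the basic building block is a small filter (or kernel) that is slid across an image to produce a feature map, followed by a simple nonlinearity. The sketch below shows that single operation in plain Python. It is not the team's network: the toy image and the hand-picked kernel are assumptions made purely for illustration, whereas in a trained CNN the kernel values are learned from example image pairs and many such layers are stacked.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and return the feature map.

    As in most CNN libraries, this is technically a cross-correlation:
    the kernel is not flipped before being applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Rectified linear unit: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4 x 4 image (illustrative): dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to dark-to-bright steps from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

features = relu(conv2d_valid(image, kernel))
for row in features:
    print(row)  # each row is [0.0, 2.0, 0.0]: the edge lights up the middle column
```

The feature map is non-zero only where the filter overlaps the edge, which is the sense in which a convolutional layer picks out local structure in an image.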

The researchers made their GALOF using fused-silica tubes and a tried-and-trusted stack-and-draw fabrication technique. The diameter of the random structure is about 278 μm and the air-hole filling fraction in the structure is around 28.5%. The fibre is 90 cm long.

Transverse Anderson localization

To generate images, they used a beam of laser light with a wavelength of 405 nm. As light passes down the fibre, it cannot scatter into the plane normal (or “transverse”) to the direction of light propagation because of the fibre’s random structure. Since the disorder does not continue along the length of the fibre, however, the light is free to travel along this direction.

To show that the technique works, the team sent images measuring tens of microns across along a section of the fibre. They created the images using a small stencil containing the letters UCF (short for “University of Central Florida”) and CREOL (short for “College of Optics and Photonics”) as the object. The image size of the object is 112 × 200 pixels and the stencils were obtained from the Modified National Institute of Standards and Technology (MNIST) database of handwritten digits.

Once the images had come out from the opposite end of the fibre, the researchers demagnified them by a factor of four and then captured them on a CCD detector.

Unique properties

“Our new system has three unique properties,” Zhao tells Physics World. “First, it transmits high-quality images without any artifacts. Second, no distal optical elements are needed to image objects that are not completely adjacent to the fibre facet and that are even several millimetres away. And third, the same image reconstruction algorithm can be used whether the fibre is bent or straight. This means no time-consuming retraining of the neural network is necessary, even if the fibre is bent by 90°.

“Combining image transmission and reconstruction is a very active area of research and integrating advanced fibre designs and deep learning strategies in this way will improve the performance of next-generation imaging systems,” he says. “Thanks to the exceptional features of our system, we hope that it will help in the design of future micro-endoscopic devices that are minimally invasive.”

The team, reporting its work in ACS Photonics (DOI: 10.1021/acsphotonics.8b00832), says that it is now busy optimizing its device and using it to image biological objects, such as various types of cells. “We are also working on making a movie to demonstrate video rate image transmission and retransmission,” reveals Zhao. “In the future, we would like to look into the possibility of 3D imaging with our device, but to do this we will need to develop new deep learning algorithms and experimental systems.

“We would of course also like to collaborate with medical experts to tailor our system to their requirements.”

1/10/2018 from physicsworld.com
